Optimizing your website’s structure is crucial for improving how search engines crawl and index your pages. By following a few essential technical SEO tips, you can ensure that your site is easily accessible to both users and search engines. This article covers various strategies to enhance your website’s performance, making it more efficient and user-friendly.

Key Takeaways

- Make sure your site has a clear structure to help search engines find all pages easily.

- Use descriptive keywords in your URLs to improve search engine rankings.

- Regularly check for broken links and fix them to enhance user experience.

- Create an XML sitemap and submit it to search engines for better indexing.

- Utilize internal links wisely to guide users and search engines to important content.

Optimizing Google Crawl Efficiency

To ensure that search engines can effectively find and index your website, it’s crucial to optimize for crawl efficiency. This involves several key strategies that can significantly enhance your site’s visibility.

Minimizing Duplicate Content

- Identify and remove duplicate pages to prevent confusion for search engines.

- Use canonical tags to indicate the preferred version of a page.

- Regularly audit your content to ensure uniqueness.
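When you audit canonical tags, one simple check is to read each page's <link rel="canonical"> tag and compare it with the URL you fetched. The sketch below uses only Python's standard library. The function name find_canonical and the sample URLs are illustrative, not part of any SEO tool.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Collects the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonical = attributes["href"]


def find_canonical(page_url: str, html: str):
    """Return the absolute canonical URL declared by the page, or None."""
    finder = CanonicalFinder()
    finder.feed(html)
    if finder.canonical is None:
        return None
    # Relative canonicals are resolved against the page's own URL.
    return urljoin(page_url, finder.canonical)


# A filtered listing URL that declares the unfiltered page as canonical.
page = "https://example.com/shoes?color=red"
canonical = find_canonical(page, '<head><link rel="canonical" href="/shoes"></head>')
print(canonical)          # https://example.com/shoes
print(canonical != page)  # True: this URL defers to another version
```

A page whose canonical differs from its own URL is telling search engines to index the other version, so those pages are the ones to review for accidental duplicates.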

Using Robots Meta Tags

- Implement robots meta tags to control which pages search engines index. A crawler has to fetch a page to see its meta tag, so don't also block that page in robots.txt.

- Use noindex tags for pages that should not appear in search results.

- Ensure that important pages are accessible to crawlers.
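To confirm that a page really carries a noindex directive, you can parse its robots meta tags. This is a minimal sketch that only reads <meta name="robots"> and <meta name="googlebot"> in the HTML; it assumes you already have the page source and does not check the X-Robots-Tag HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Gathers directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            # Directives are comma-separated, e.g. "noindex, follow".
            for directive in (attributes.get("content") or "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def is_noindex(html: str) -> bool:
    """True if the page asks not to be indexed ("none" means noindex, nofollow)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives or "none" in parser.directives


print(is_noindex('<meta name="robots" content="noindex, follow">'))  # True
```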

Creating an XML Sitemap

An XML sitemap acts as a roadmap for search engines, helping them discover your pages more efficiently. Here’s how to create one:

- List all important URLs on your site.

- Submit the sitemap to Google Search Console.

- Update the sitemap regularly as you add or remove pages.

A well-structured XML sitemap is essential for guiding search engines through your website’s content, ensuring that all important pages are indexed properly.
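If your platform doesn't generate a sitemap for you, a basic one is easy to produce. The sketch below writes the standard sitemaps.org format with Python's built-in xml.etree.ElementTree; the URLs and dates are placeholder examples, and in practice the page list would come from your CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (absolute_url, last_modified_iso_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # special characters are escaped
        ET.SubElement(url, "lastmod").text = lastmod
    # encoding="unicode" returns a str rather than bytes.
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/technical-seo-tips", "2024-02-01"),
])
print(xml)
```

A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed; larger sites split their URLs across several sitemaps listed in a sitemap index file.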

By following these strategies, you can significantly improve your site’s crawl efficiency, making it easier for search engines to index your content effectively. Remember, optimizing your site structure is a vital part of your overall SEO strategy.

Enhancing Internal Linking Structure

Importance of Internal Links

Internal links are crucial for guiding both users and search engines through your website. A well-structured internal link system helps improve user experience and boosts your site’s SEO. Here are some key points to consider:

- They help distribute page authority across your site.

- They make it easier for search engines to crawl your pages.

- They provide context for users about what to expect on linked pages.

Using Descriptive Anchor Text

When creating internal links, use descriptive anchor text. This means the text should clearly indicate what the linked page is about. Avoid vague phrases like “click here.” Instead, use phrases that describe the content, such as “learn more about our services.”
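On a large site, vague anchors are easier to find with a script than by eye. This sketch collects the visible text of every link in a page and flags those matching a list of generic phrases. The GENERIC_ANCHORS list is a hypothetical starting point, not an official standard, so adjust it to your own content.

```python
from html.parser import HTMLParser

# Hypothetical list of phrases treated as non-descriptive; extend to taste.
GENERIC_ANCHORS = {"click here", "here", "read more", "more", "this page", "link"}


class AnchorCollector(HTMLParser):
    """Records (href, visible text) for every <a href=...> element."""

    def __init__(self) -> None:
        super().__init__()
        self.anchors = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:   # only collect text inside a link
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())
            self.anchors.append((self._href, text))
            self._href = None


def vague_anchors(html: str):
    collector = AnchorCollector()
    collector.feed(html)
    return [(href, text) for href, text in collector.anchors
            if text.lower().strip(".!") in GENERIC_ANCHORS]


html = ('<p>To see pricing, <a href="/pricing">click here</a>. '
        'Or <a href="/services">learn more about our services</a>.</p>')
print(vague_anchors(html))  # [('/pricing', 'click here')]
```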

Regularly Auditing Internal Links

It’s important to regularly check your internal links to ensure they are working properly. Broken links can frustrate users and hurt your SEO. Here’s how to keep your links in check:

- Use tools like Google Search Console to find broken links.

- Update or remove any links that lead to non-existent pages.

- Ensure that all links point to relevant and useful content.
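Between full audits, you can run a quick offline check: extract the internal links from a page and compare them against the set of URLs you know are live. In this sketch, live_urls is an assumption; it would come from your own crawl or CMS export (for example, every URL that returned 200). The function name broken_internal_links is illustrative.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag, urlparse


class LinkExtractor(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def broken_internal_links(page_url, html, live_urls):
    """Return internal links on page_url whose target is not in live_urls."""
    extractor = LinkExtractor()
    extractor.feed(html)
    site = urlparse(page_url).netloc
    broken = []
    for href in extractor.hrefs:
        # Resolve relative links and drop #fragments before comparing.
        target, _fragment = urldefrag(urljoin(page_url, href))
        # External links and mailto: links have a different (or empty) host.
        if urlparse(target).netloc == site and target not in live_urls:
            broken.append(target)
    return broken


live = {"https://example.com/", "https://example.com/services"}
html = ('<a href="/services#pricing">Services</a> '
        '<a href="/old-page">Old</a> <a href="https://other.org/x">Ext</a>')
print(broken_internal_links("https://example.com/", html, live))
```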

Regular audits of your internal linking structure can lead to better site performance and improved user satisfaction.

By enhancing your internal linking structure, you can create a more organized and user-friendly website that is easier for search engines to crawl. This will ultimately lead to better rankings and a more satisfying experience for your visitors.

Effective URL Optimization Strategies

Simplifying URL Structure

A clear and simple URL structure is essential for both users and search engines. Short and descriptive URLs help search engines understand the content of the page better. Here are some tips:

- Use relevant keywords in your URLs.

- Avoid unnecessary parameters and numbers.

- Keep URLs as short as possible while still being descriptive.

Using Descriptive Keywords

Incorporating descriptive keywords in your URLs can significantly improve your SEO. This makes it easier for search engines to index your pages and for users to understand what the page is about. For example:

- Instead of www.example.com/12345, use www.example.com/technical-seo-tips.

- This not only helps with SEO but also makes the URL more user-friendly.
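Most platforms turn a page title into a URL slug automatically, but if you generate URLs yourself, a small slug function keeps them consistent. This sketch lowercases the title, folds accented letters to plain ASCII, and joins words with hyphens. It assumes Latin-script titles; characters with no ASCII equivalent are dropped, so non-Latin titles would need a different approach.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a short, lowercase, hyphenated URL slug."""
    # Fold accented characters to ASCII (é -> e) and drop anything else non-ASCII.
    ascii_title = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    # Replace each run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower())
    return slug.strip("-")


print(slugify("Technical SEO Tips"))  # technical-seo-tips
```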

Avoiding Excessive Subfolders

Having too many subfolders can complicate your URL structure and make it harder for search engines to crawl your site. Here are some guidelines:

- Limit the number of subfolders to two or three.

- Use clear and meaningful names for each folder.

- Avoid using special characters or numbers that don’t convey meaning.
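To apply the two-or-three-subfolder guideline across a whole URL list, you can count the folder segments in each path. In this sketch the final path segment is treated as the page itself unless the URL ends with a slash; max_folders=3 simply mirrors the guideline above.

```python
from urllib.parse import urlparse


def folder_depth(url: str) -> int:
    """Count the directory segments before the final path segment."""
    path = urlparse(url).path
    segments = [s for s in path.split("/") if s]
    # The last segment is the page itself unless the URL ends with a slash.
    if segments and not path.endswith("/"):
        segments = segments[:-1]
    return len(segments)


def too_deep(urls, max_folders=3):
    """Return URLs nested in more than max_folders subfolders."""
    return [u for u in urls if folder_depth(u) > max_folders]


print(folder_depth("https://example.com/blog/seo/technical-seo-tips"))  # 2
```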

Optimizing your URLs is a crucial step in enhancing your website’s overall performance. By focusing on clarity and relevance, you can improve both user experience and search engine visibility.

By following these strategies, you can create a more effective URL structure that benefits both your users and your SEO efforts. Remember, a well-optimized URL is a key part of a successful website!

Improving Site Speed for Better Crawling

Utilizing Cache Plugins

Using cache plugins can significantly speed up your site. These plugins store pre-built static copies of your pages, so the server can send them without regenerating each page on every request. Here are some benefits of cache plugins:

- Reduces load times for all visitors, not just returning ones.

- Decreases server load.

- Improves overall user experience.
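Cache plugins are platform-specific, so here is a generic illustration of the idea rather than any plugin's code: rendered HTML is stored per URL path and reused until it expires or the page is edited. The PageCache class and its render callback are hypothetical names used only for this sketch.

```python
import time
from typing import Callable, Dict, Tuple


class PageCache:
    """Keeps rendered HTML per URL path for ttl_seconds before rebuilding it."""

    def __init__(self, render: Callable[[str], str], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._render = render
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, path: str) -> str:
        now = self._clock()
        cached = self._entries.get(path)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]          # cache hit: no rendering work
        html = self._render(path)     # miss or expired: rebuild once
        self._entries[path] = (now, html)
        return html

    def invalidate(self, path: str) -> None:
        """Drop a page after it is edited so the next request rebuilds it."""
        self._entries.pop(path, None)


renders = []


def render(path: str) -> str:
    renders.append(path)              # stands in for slow templating/DB work
    return f"<h1>{path}</h1>"


cache = PageCache(render, ttl_seconds=60)
cache.get("/about")
cache.get("/about")
print(renders)  # ['/about']: the second request was served from the cache
```

The expiry time is a trade-off: a longer TTL saves more server work, while invalidating on edit keeps visitors from seeing stale pages.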

Implementing Asynchronous Loading

Asynchronous loading allows your website to load scripts without blocking the display of content. This means that while scripts are loading, users can still see and interact with the page. Here’s how to implement it:

- Add the async attribute to your script tags: <script async src="script.js"></script>.

- Ensure that critical content loads first.

- Test your site to confirm improved load times.
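A quick way to find candidates for async is to list the external scripts in the page's <head> that have neither async nor defer. Note the difference between the two: async scripts run as soon as they download, in no guaranteed order, while defer scripts run after the HTML is parsed, in document order. Scripts with type="module" are deferred by default. This sketch only inspects static HTML and assumes scripts in <body> are less critical.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Lists external scripts in <head> without async, defer, or type="module"."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            attributes = dict(attrs)  # boolean attributes map to None
            src = attributes.get("src")
            is_module = (attributes.get("type") or "").lower() == "module"
            if src and "async" not in attributes and "defer" not in attributes \
                    and not is_module:
                self.blocking.append(src)

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def render_blocking_scripts(html: str):
    finder = BlockingScriptFinder()
    finder.feed(html)
    return finder.blocking


page = ('<html><head><script src="analytics.js"></script>'
        '<script async src="script.js"></script>'
        '<script defer src="app.js"></script></head>'
        '<body><script src="footer.js"></script></body></html>')
print(render_blocking_scripts(page))  # ['analytics.js']
```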

Monitoring Site Speed Regularly

Regularly checking your site speed is crucial for maintaining optimal performance. Use tools like Google PageSpeed Insights to:

- Identify slow-loading pages.

- Get recommendations for improvements.

- Track changes over time to ensure your optimizations are effective.
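To track scores over time, you can call the PageSpeed Insights API instead of using the web page. This sketch assumes Google's v5 endpoint and the lighthouseResult.categories.performance.score field of its JSON response (a value from 0 to 1); check the current API documentation before relying on it, and add an API key for regular use. The helper names and the five-point tolerance are illustrative.

```python
import json
import urllib.parse
import urllib.request

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile", api_key: str = "") -> str:
    params = {"url": page_url, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return PSI_ENDPOINT + "?" + urllib.parse.urlencode(params)


def fetch_report(page_url: str, strategy: str = "mobile") -> dict:
    # Network call: a single analysis can take tens of seconds.
    with urllib.request.urlopen(psi_request_url(page_url, strategy), timeout=120) as resp:
        return json.load(resp)


def performance_score(report: dict) -> int:
    """Lighthouse reports the performance score as 0-1; return it as 0-100."""
    score = report["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def regressions(previous: dict, current: dict, tolerance: int = 5):
    """Return pages whose score dropped by more than tolerance points."""
    return sorted(url for url, score in current.items()
                  if url in previous and previous[url] - score > tolerance)
```

Storing each run's scores (for example, one JSON file per day) and comparing them with regressions() turns a one-off check into the kind of ongoing monitoring described above.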

Regular monitoring helps you catch issues before they affect user experience and search rankings.

By focusing on these strategies, you can enhance your website’s speed, leading to better crawling and improved search engine rankings. Remember, website speed optimization is key to keeping users engaged and satisfied!

Maximizing Crawl Budget

Removing Duplicate Pages

To make the most of your crawl budget, it’s important to remove duplicate pages. Duplicate content can confuse search engines and waste valuable crawling resources. Here are some steps to follow:

- Identify duplicate pages using tools like Screaming Frog.

- Use canonical tags to point to the main version of the content.

- Delete or redirect unnecessary duplicates.
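Before reaching for a crawler, you can catch exact duplicates by hashing each page's normalized text. This sketch strips tags with a crude regular expression and collapses whitespace, so it only groups pages whose visible text is identical; near-duplicates need a fuzzier comparison. The input dictionary of URL to HTML is assumed to come from your own crawl.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(html: str) -> str:
    """Hash page text after stripping tags and collapsing whitespace."""
    text = re.sub(r"<[^>]+>", " ", html)     # crude tag removal
    text = " ".join(text.lower().split())
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def exact_duplicates(pages: dict) -> list:
    """pages maps URL -> HTML; returns groups of URLs with identical text."""
    groups = defaultdict(list)
    for url, html in pages.items():
        groups[content_fingerprint(html)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "https://example.com/shoes": "<h1>Shoes</h1><p>Red and blue.</p>",
    "https://example.com/shoes?sort=price": "<h1>Shoes</h1>\n<p>Red and  blue.</p>",
    "https://example.com/hats": "<h1>Hats</h1>",
}
print(exact_duplicates(pages))
```

Each group it returns is a candidate for a canonical tag pointing at one preferred URL, or for a redirect if the extra URLs serve no purpose.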

Fixing Broken Links

Broken links can lead to a poor user experience and waste your crawl budget. To fix broken links:

- Regularly check for broken links using tools like Google Search Console.

- Use 301 redirects to send the URLs of removed pages to the most relevant live pages.

- Remove links to pages that no longer exist.
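As redirects accumulate, one removed URL can end up redirecting to another removed URL, forcing crawlers through several hops. If you keep your redirects in a simple map, you can flatten it so every old URL points straight at its final destination. The map format here is an assumption; translating the result into your server's redirect rules is left to your platform.

```python
def flatten_redirects(redirects: dict) -> dict:
    """Point every old URL straight at its final destination.

    redirects maps a removed URL to its replacement. Chains such as
    A -> B -> C become A -> C so crawlers follow a single 301 hop.
    Raises ValueError if the map contains a loop.
    """
    flat = {}
    for start in redirects:
        seen = {start}
        target = redirects[start]
        while target in redirects:        # target was itself redirected
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[start] = target
    return flat


chains = {"/old-shoes": "/shoes-2023", "/shoes-2023": "/shoes", "/blog/old": "/blog/new"}
print(flatten_redirects(chains))
```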

Ensuring CSS and JavaScript Crawlability

Search engines need to access your CSS and JavaScript files to understand your site fully. To ensure they are crawlable:

- Check that your robots.txt file does not disallow these files.

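- Use the URL Inspection tool in Google Search Console to see how Googlebot renders a page and whether any resources fail to load. (Google retired its standalone Mobile-Friendly Test in December 2023.)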

- Regularly monitor your site’s performance to catch any crawlability issues early.
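You can check whether robots.txt blocks your CSS and JavaScript with Python's built-in urllib.robotparser. One caveat: this parser applies rules in file order and does not implement Google's wildcard and longest-match handling, so treat its answer as a first pass on simple files and confirm edge cases with Google's own tools. The sample robots.txt and asset URLs are illustrative.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt: str, resource_urls, user_agent="Googlebot"):
    """Return the CSS/JS URLs that robots_txt forbids user_agent to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


robots = "User-agent: *\nDisallow: /assets/js/\nAllow: /\n"
assets = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/site.css",
]
print(blocked_resources(robots, assets))  # ['https://example.com/assets/js/app.js']
```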

Keeping your site clean and organized helps search engines find and index your important pages more efficiently. This is key to maximizing your crawl budget and improving your site’s visibility.

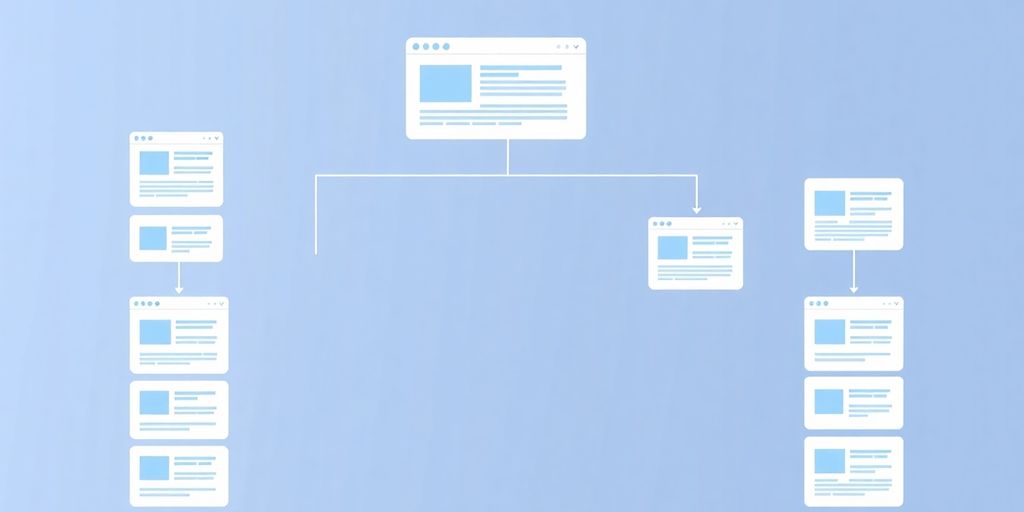

Setting Up a Robust Site Architecture

Creating a strong site architecture is essential for both users and search engines. A well-organized site helps search engines find and index your pages more efficiently. Here are some key strategies to consider:

Grouping Related Pages

- Organize pages logically: Keep similar content together. For example, link your blog homepage to individual posts and author pages.

- Use categories and tags: This helps in grouping related content, making it easier for users to navigate.

- Limit clicks: Ensure that important pages are no more than three clicks away from the homepage.
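Click depth is a shortest-path question, so a breadth-first search over your internal link graph answers it directly. In this sketch the links dictionary (page to the pages it links to) is assumed to come from a crawl; pages that appear in the graph but are never reached from the homepage are orphans and are reported as too deep.

```python
from collections import deque


def click_depths(links: dict, home: str) -> dict:
    """Map each page reachable from home to its minimum number of clicks."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:          # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_too_deep(links: dict, home: str, max_clicks: int = 3) -> list:
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    # Pages never reached from home are orphans; count them as too deep.
    return sorted(p for p in all_pages if depths.get(p, max_clicks + 1) > max_clicks)


links = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-1/comments"],
    "/blog/post-1/comments": ["/archive/2019"],
    "/orphan": ["/"],
}
print(pages_too_deep(links, "/"))  # ['/archive/2019', '/orphan']
```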

Prioritizing Important Pages

- Identify key pages: Determine which pages are most important for your business, like product or contact pages.

- Use internal links wisely: Pages with more internal links pointing to them generally look more important to search engines.

- Regularly update links: Make sure that your internal links reflect any changes in your site structure.
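To see whether your key pages actually receive the most internal links, you can count inbound links per page from the same kind of crawl data. This is a rough proxy, not a measure of how search engines weigh links. Each linking page is counted once and self-links are ignored; the key_pages list and the threshold of two are illustrative.

```python
from collections import Counter


def inbound_link_counts(links: dict) -> Counter:
    """Count how many distinct internal pages link to each URL."""
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):   # count each linking page once
            if target != source:      # ignore self-links
                counts[target] += 1
    return counts


links = {
    "/": ["/services", "/blog", "/contact"],
    "/blog": ["/blog/post-1", "/services"],
    "/blog/post-1": ["/services", "/services", "/blog"],
}
counts = inbound_link_counts(links)
key_pages = ["/services", "/contact", "/pricing"]
underlinked = [p for p in key_pages if counts[p] < 2]
print(underlinked)  # ['/contact', '/pricing']
```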

Using Breadcrumb Menus

- Implement breadcrumb navigation: This helps users understand their location within your site.

- Enhance user experience: Breadcrumbs make it easier for visitors to backtrack and explore related content.

- Improve SEO: Search engines can better understand the structure of your site through breadcrumbs.
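If your URLs already mirror your site hierarchy, a breadcrumb trail can be derived from the path. This sketch assumes each intermediate folder (such as /blog/) is a real category page; labels come from an optional titles mapping, falling back to the URL segment. In practice you would prefer real page titles over derived ones.

```python
from typing import List, Optional, Tuple
from urllib.parse import urlparse


def breadcrumb_trail(url: str, titles: Optional[dict] = None) -> List[Tuple[str, str]]:
    """Build (label, url) pairs from the homepage down to url."""
    titles = titles or {}
    parsed = urlparse(url)
    root = f"{parsed.scheme}://{parsed.netloc}"
    trail = [(titles.get("/", "Home"), root + "/")]
    path = "/"
    segments = [s for s in parsed.path.split("/") if s]
    for i, segment in enumerate(segments):
        path += segment
        # Folder levels end with "/"; the final page keeps the URL's own form.
        is_last = i == len(segments) - 1
        if not is_last or parsed.path.endswith("/"):
            path += "/"
        label = titles.get(path, segment.replace("-", " ").capitalize())
        trail.append((label, root + path))
    return trail


trail = breadcrumb_trail(
    "https://example.com/blog/technical-seo/crawl-budget",
    titles={"/blog/technical-seo/": "Technical SEO"},
)
print(" > ".join(label for label, _ in trail))  # Home > Blog > Technical SEO > Crawl budget
```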

A well-structured site not only improves user experience but also enhances your site’s visibility in search results.

By following these strategies, you can create a robust site architecture that benefits both users and search engines, ultimately leading to better crawling and indexing.

Utilizing Technical SEO Configurations

Technical SEO is all about making sure your website is set up correctly so that search engines can find and understand it. Here are some key areas to focus on:

Understanding HTTP Status Codes

HTTP status codes are the server’s response to a request for a page. They tell browsers and crawlers whether the page exists, has moved, or is missing. Here are some important ones:

- 200 OK: The request succeeded and the page content was returned, so the page is eligible for indexing.

- 301 Moved Permanently: This means the page has moved to a new URL, and its SEO value is passed to the new page.

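- 404 Not Found: The page doesn’t exist. For pages you removed on purpose, a 404 (or 410) is the right response; the problem is internal links that still point to them, which waste crawler visits and frustrate users.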
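To see these codes for your own URLs, request each one without following redirects; otherwise a 301 is silently replaced by the status of its destination. This sketch uses urllib with a redirect handler that declines to follow, plus a small classifier whose audit notes are illustrative opinions, not official guidance. It sends HEAD requests, which a few servers reject with 405; retry with GET in that case.

```python
import urllib.error
import urllib.request
from http import HTTPStatus


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    # Returning None stops urllib from following the redirect,
    # so the 3xx surfaces as an HTTPError we can inspect.
    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


_opener = urllib.request.build_opener(_NoRedirect)


def fetch_status(url: str, timeout: float = 10.0) -> int:
    request = urllib.request.Request(url, method="HEAD")
    try:
        with _opener.open(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:   # 3xx, 4xx and 5xx land here
        return err.code


def crawl_note(status: int) -> str:
    """Map a status code to a short audit note."""
    if status == HTTPStatus.OK:
        return "ok"
    if status in (HTTPStatus.MOVED_PERMANENTLY, HTTPStatus.PERMANENT_REDIRECT):
        return "permanent redirect: link straight to the new URL"
    if status in (HTTPStatus.FOUND, HTTPStatus.SEE_OTHER, HTTPStatus.TEMPORARY_REDIRECT):
        return "temporary redirect: use 301 if the move is permanent"
    if status in (HTTPStatus.NOT_FOUND, HTTPStatus.GONE):
        return "missing: fix or remove internal links to it"
    if status >= 500:
        return "server error: investigate"
    return "review manually"
```

Usage, against a real URL: crawl_note(fetch_status("https://example.com/old-page")).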

Optimizing Robots.txt and Meta Robots Tags

These tools help control what search engines can see on your site. Here’s how to use them:

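- Robots.txt: This file tells crawlers which URLs they should not crawl. It does not reliably keep pages out of the index; a blocked page can still be indexed if other sites link to it, so use noindex for that.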

- Meta Robots Tags: These tags can be added to individual pages to control indexing.

- Canonical Tags: Use these to prevent duplicate content issues by pointing to the main version of a page.

Implementing Structured Data

Structured data helps search engines understand your content better. Here’s why it’s important:

- It can improve your visibility in search results.

- It helps search engines display rich snippets, which can attract more clicks.

- Structured data can enhance user experience by providing more context about your content.
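Structured data is usually added as a JSON-LD script in the page. This sketch generates schema.org Article markup with Python's json module; the property set is deliberately minimal and the sample values are placeholders. It also escapes "</" so a title containing "</script>" cannot break out of the tag. Validate real markup with Google's Rich Results Test before publishing.

```python
import json


def article_json_ld(headline: str, author: str, published: str, url: str) -> str:
    """Return a <script> tag with schema.org Article markup in JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,   # ISO 8601 date, e.g. "2024-03-01"
        "url": url,
    }
    # "<\/" is a valid JSON escape for "</" and keeps the script tag intact.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


print(article_json_ld("Technical SEO Tips", "Jane Doe", "2024-03-01",
                      "https://example.com/technical-seo-tips"))
```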

Understanding these technical aspects is crucial for improving your website’s performance and visibility. Technical SEO is essential for guiding search engines to your most important pages.

To make the most of your website, it’s important to get these technical SEO settings right. They help your site show up in search results, making it easier for people to find you. Ready to create a fantastic website that stands out? Visit us at Pikuto and start your journey today!

Conclusion

In summary, optimizing your website’s structure is crucial for better crawling and indexing by search engines. By following the tips we’ve discussed, like improving your internal links, using clear URLs, and ensuring your site loads quickly, you can help search engines find and understand your content more easily. This not only boosts your chances of ranking higher in search results but also enhances the experience for your visitors. Remember, a well-structured site is key to attracting more traffic and keeping users engaged. So take the time to implement these strategies, and watch your website thrive!